Unit tests

Introduction to unit tests

A unit test tests one single unit of software. A unit is the smallest piece of code that can be logically isolated in a system; in most programming languages, it is a function, a subroutine, or a method. The purpose of a unit test is to validate that each unit of the software performs as designed. In the Software Factory, you can write unit tests for the following logic concepts:

- Defaults

- Layouts

- Contexts

- Badges

- Tasks

- Processes

- Subroutines (of type Procedure and Function) - The return value of functions can be Scalar or Table. The setup of scalar- and table-valued functions is similar, except that the unit test code of table-valued functions is assembled differently and executed in the background. This type of unit test does not show the function's output directly. Instead, the outcome must be verified using an assertion query. To enable an assertion query, see the procedure to create a unit test.

- Insert, delete, and update statements.

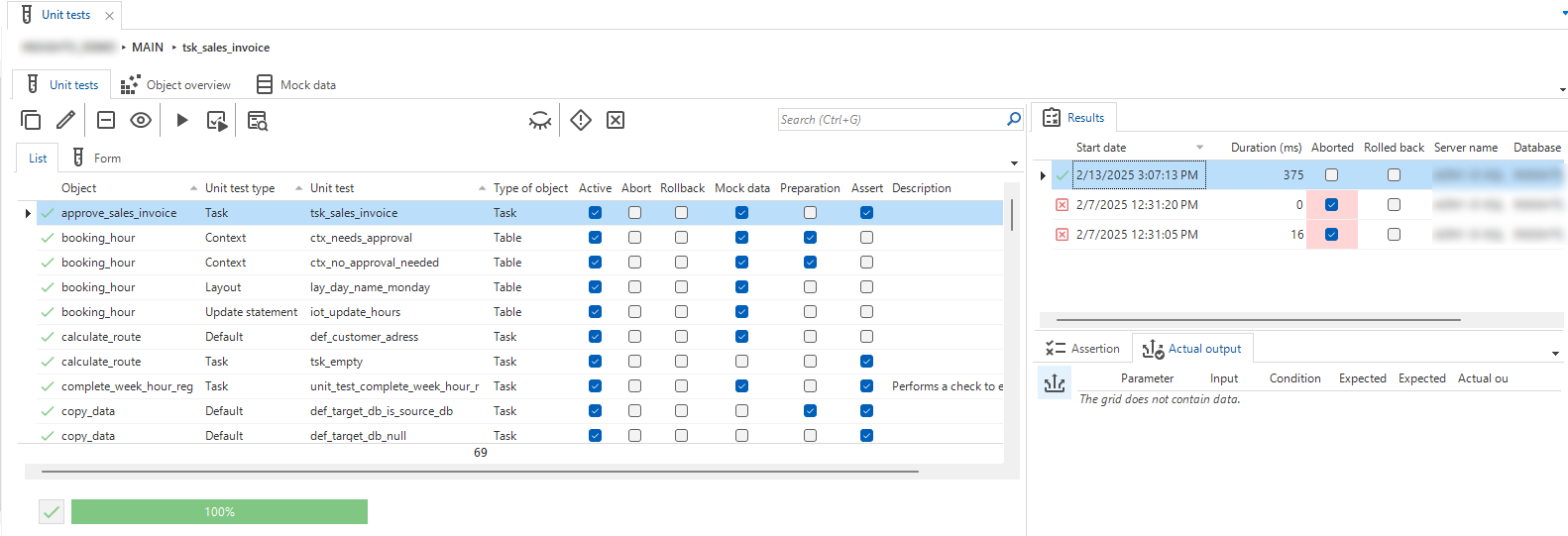

The image below shows the unit test screen. This is the place to create, maintain and execute unit tests.

Unit tests


Set up a unit test

Create a unit test

Tab Object overview shows all the objects for which you can create unit tests. For each object, a suffix indicates the number of active unit tests.

To create a unit test:

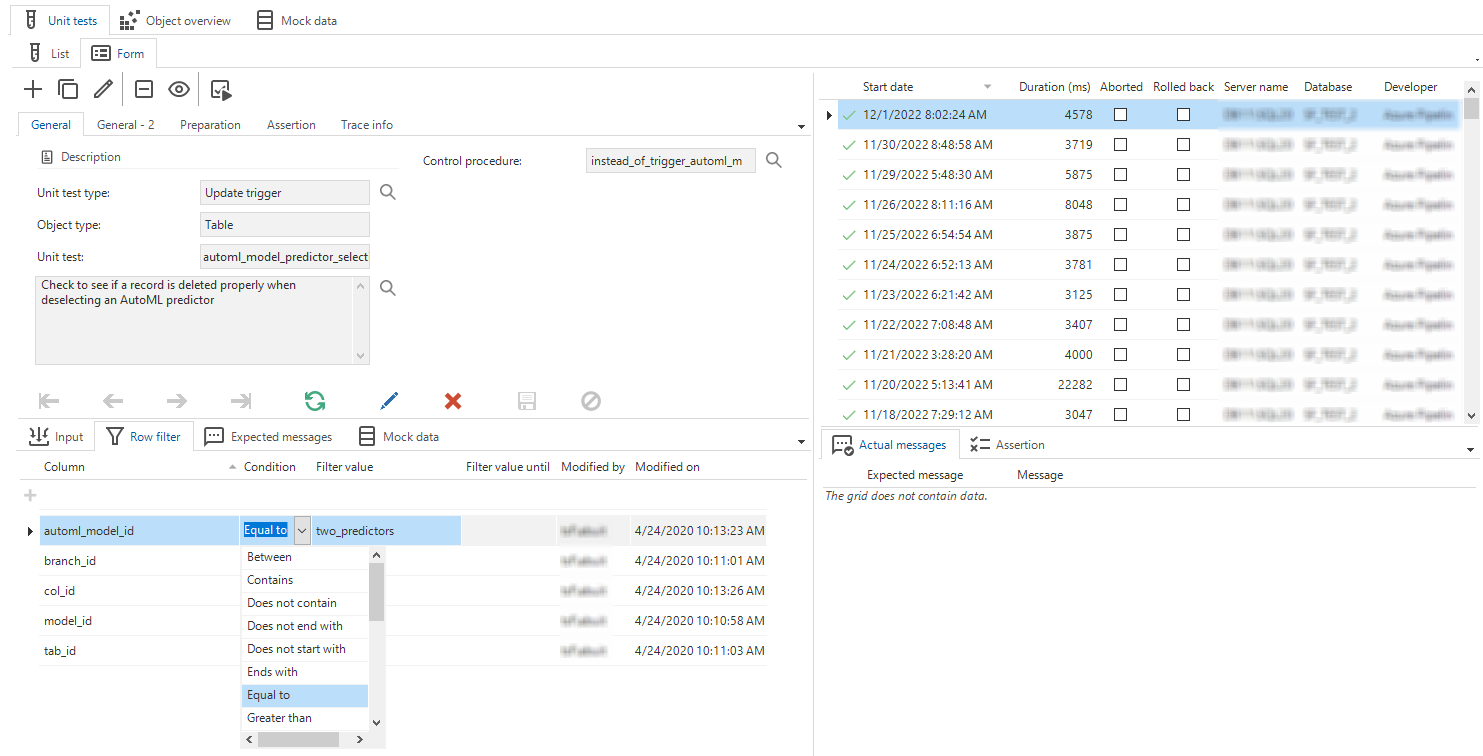

menu Quality > Unit tests > tab Form

- Enter a name for the test in the field Unit test.

- Select a Unit test type.

Note that if you select Insert/Update/Delete statement, the Software Factory automatically adds input parameters for mandatory columns without a specified default value, except for identity columns.

- Fill in at least the other mandatory fields. Which fields are requested or mandatory depends on the selected unit test type and object type.

- Optional. Select a control procedure. See also Control procedure in a unit test.

- If the unit test should abort if it does not succeed, select the Should abort checkbox.

- If the code should do a rollback if an error occurs, select the Should rollback checkbox.

- A preparation query can be used, for example, to influence parameter values. In tab Preparation, check the Use prepare query box to enable the query field.

- You can test the outcome of a unit test with parameters. See Add parameters to a unit test. If no output is available, you can verify the outcome with an assertion query, for example, for specific tasks and statements. The output parameters for a unit test are also available in the assertion query. In tab Assertion, select the Use assertion query checkbox to enable the assertion query field. This field is filled with an example query by default, showing how the assertion result can be thrown when certain conditions are met.

- Test your unit test with the task Execute selected unit tests.
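The rule for automatically added input parameters (mandatory columns without a default value, except identity columns) can be read as a simple filter over the columns of the tested table. The Python sketch below is only an illustration of that rule, not Software Factory code: the `Column` class, its fields, and `generated_input_parameters` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    # Hypothetical, simplified column description (not a Software Factory type).
    name: str
    mandatory: bool
    has_default: bool = False
    is_identity: bool = False


def generated_input_parameters(columns):
    """Return the names of the columns that would get an input parameter."""
    return [
        col.name
        for col in columns
        if col.mandatory and not col.has_default and not col.is_identity
    ]


# Hypothetical table definition used only for this example.
employee_columns = [
    Column("employee_id", mandatory=True, is_identity=True),  # identity: skipped
    Column("last_name", mandatory=True),                      # needs an input value
    Column("status", mandatory=True, has_default=True),       # default fills it in
    Column("phone", mandatory=False),                         # optional: skipped
]
```

In this example, only `last_name` qualifies, which is why generated input parameters still need a value before the test can run.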

You can use the enrichment Generate unit test with AI to speed up the process of creating unit tests.

Example of an assertion query
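The default example query that the Software Factory generates is not reproduced here. As a rough illustration of the idea only, the following Python sketch uses the standard `sqlite3` module: a unit that changes data but returns no output is verified afterwards by a query that raises an error when an unwanted condition is met. The table `sales_order`, the function names, and the `AssertionFailed` exception are all assumptions made for this example.

```python
import sqlite3


class AssertionFailed(Exception):
    """Raised when the assertion query finds an unexpected state."""


def ship_order(conn, order_id):
    # Hypothetical unit under test: it changes data but returns no output.
    conn.execute(
        "UPDATE sales_order SET status = 'shipped' WHERE order_id = ?",
        (order_id,),
    )


def assert_order_shipped(conn, order_id):
    # Assertion query: count the rows that contradict the expected outcome.
    rows = conn.execute(
        "SELECT COUNT(*) FROM sales_order WHERE order_id = ? AND status <> 'shipped'",
        (order_id,),
    ).fetchall()
    if rows[0][0] > 0:
        raise AssertionFailed(f"order {order_id} was not shipped")


def make_example_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE sales_order (order_id INTEGER PRIMARY KEY, status TEXT NOT NULL)"
    )
    conn.execute("INSERT INTO sales_order VALUES (1, 'open')")
    return conn
```

Calling `ship_order(conn, 1)` followed by `assert_order_shipped(conn, 1)` passes silently; if the status is not updated, the assertion raises `AssertionFailed`.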

Add parameters to a unit test

menu Quality > Unit tests > tab Form

It is possible to add parameters to a unit test. A distinction is made between input parameters, output parameters, and row filters.

- The row filters are used in the 'where' clause when testing update or delete statements.

- The output parameters will be checked after the unit test has been executed.

- The input parameters provide initial values that are used by the unit test. Make sure to add values to any input parameters that were generated automatically when you created the unit test.
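To make the three roles concrete, the sketch below mimics a unit test for an update statement in plain Python with `sqlite3`: input parameters supply the new values, row filters end up in the `WHERE` clause, and output parameters are compared after the statement has run. This is an assumption-based illustration, not the code the Software Factory generates; `run_update_test`, `make_example_db`, and the `employee` table are hypothetical.

```python
import sqlite3


def run_update_test(conn, table, input_values, row_filters, expected_output):
    """Run an update and report whether the output matches the expectation.

    Table and column names are interpolated directly, so this sketch must only
    be used with trusted, hard-coded identifiers and at least one row filter.
    """
    # Input parameters become the SET clause, row filters the WHERE clause.
    set_clause = ", ".join(f"{col} = ?" for col in input_values)
    where_clause = " AND ".join(f"{col} = ?" for col in row_filters)
    conn.execute(
        f"UPDATE {table} SET {set_clause} WHERE {where_clause}",
        [*input_values.values(), *row_filters.values()],
    )

    # Output parameters are checked only after the statement has been executed.
    columns = ", ".join(expected_output)
    row = conn.execute(
        f"SELECT {columns} FROM {table} WHERE {where_clause}",
        list(row_filters.values()),
    ).fetchone()
    if row is None:
        return False  # the row filters matched nothing
    return dict(zip(expected_output, row)) == expected_output


def make_example_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE employee (employee_id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)"
    )
    conn.executemany(
        "INSERT INTO employee VALUES (?, ?, ?)",
        [(1, "Alice", 4000), (2, "Bob", 4200)],
    )
    return conn
```

For example, `run_update_test(conn, "employee", {"salary": 5000}, {"employee_id": 2}, {"salary": 5000, "name": "Bob"})` updates only the row selected by the row filter and then checks both output values.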

When specific data is required to meet certain conditions in the code, the best practice is not to rely on live data. Instead, you should use data sets as mock data. In this way, the necessary data is always available for the test. See Mock data in a unit test.

Row filter in a unit test


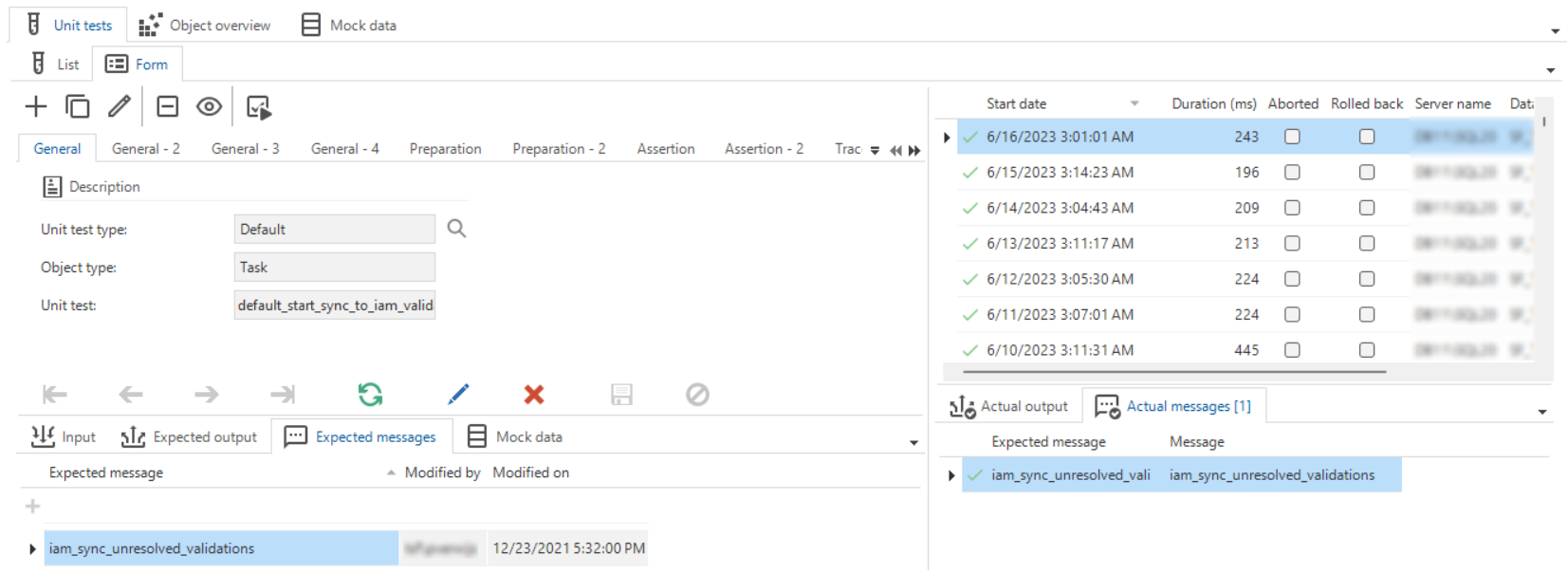

Add expected messages to a unit test

menu Quality > Unit tests > tab Form

As well as setting up the parameters used to execute the unit test, you can also specify the expected messages. These are also checked when a unit test is run, to verify that the correct messages are returned from the database when a procedure is executed.

For example, if a default procedure is supposed to throw a warning if an invalid value is entered, then this warning message can be specified as an expected message. If the unit test is executed with the invalid value, this message should also appear in the Actual messages tab.

If a database error occurs, no expected message will be returned. This can be prevented using, for example, IF-ELSE statements that validate any part of the following statements that could cause a database error.

If there is a variable that passes its value on to a mandatory column, make sure that this variable has a value.
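The difference between a guarded and an unguarded statement can be sketched as follows. The example uses Python with `sqlite3` rather than the procedure language of your database, and the names `add_employee_guarded`, `add_employee_unguarded`, and the message id `employee_name_required` are invented for illustration. The guarded version validates the value first and reports a message; the unguarded version lets the mandatory column raise a database error, in which case no message is returned at all.

```python
import sqlite3


def make_example_db():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE employee (employee_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    return conn


def add_employee_unguarded(conn, name):
    # No validation: a missing name violates NOT NULL and raises a database
    # error before any message can be returned.
    conn.execute("INSERT INTO employee (name) VALUES (?)", (name,))
    return []


def add_employee_guarded(conn, name):
    messages = []
    if name is None:
        # Guard: report a message instead of running a statement that would fail.
        messages.append("employee_name_required")
    else:
        conn.execute("INSERT INTO employee (name) VALUES (?)", (name,))
    return messages


def messages_match(actual, expected):
    # Compare actual and expected messages, ignoring their order.
    return sorted(actual) == sorted(expected)
```

With the guard in place, a test that passes an empty name can list `employee_name_required` as its expected message and compare it with the actual messages.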

Expected message in a unit test


Control procedure in a unit test

To clarify which functionality is covered by a unit test, you can select a control procedure when you create a unit test or at a later moment.

To select one at a later moment:

menu Quality > Unit tests > tab Form

- In the field Control procedure, select a control procedure.

- Use the task Go to control procedure in Functionality (Alt + C) to view the control procedure in the Functionality screen in, for example, the following situations:

  - When you are creating a unit test:

    - Check what the code does

    - Find which parameter you need to add as expected output

  - When you are inspecting a failed unit test (see also Fix a failed unit test):

    - View the context

    - Check whether maintenance is necessary

Mock data in a unit test

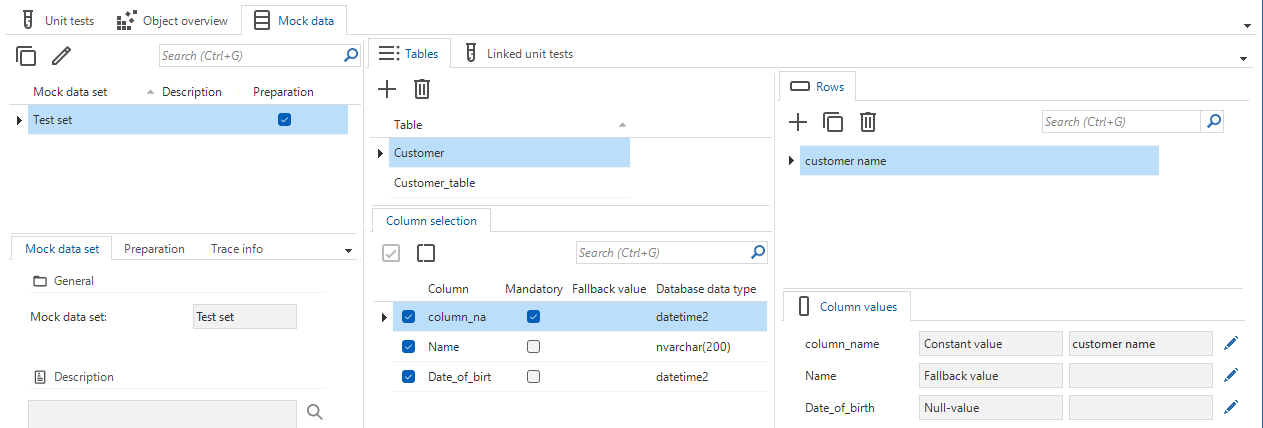

Set up a mock data set for a unit test

Mock data sets are collections of data that can be used for testing purposes. The data in a mock data set only exists in the context of your unit test and can be based on either a table or a view. For more information about how this mock data is created, see Mock data for a table or view.

Every time you run a unit test, the data from your defined mock data set is added to a transaction. This transaction is rolled back at the end, which means the data will never actually be added to your database.
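The effect of running mock data inside a transaction that is rolled back can be reproduced in a few lines. The sketch below uses Python's `sqlite3` module purely as a stand-in database; the `customer` table and the function names are assumptions, and the real unit test code generated by the Software Factory will look different.

```python
import sqlite3


def make_example_db():
    # isolation_level=None disables implicit transactions, so BEGIN and
    # ROLLBACK are issued explicitly below.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    return conn


def count_customers(conn):
    # Hypothetical unit under test: it only reads the customer table.
    rows = conn.execute("SELECT COUNT(*) FROM customer").fetchall()
    return rows[0][0]


def run_with_mock_data(conn, mock_rows, unit_under_test):
    """Insert mock rows, run the unit, and always roll the transaction back."""
    conn.execute("BEGIN")
    try:
        conn.executemany(
            "INSERT INTO customer (customer_id, name) VALUES (?, ?)", mock_rows
        )
        return unit_under_test(conn)
    finally:
        # The mock rows never become permanent data.
        conn.execute("ROLLBACK")
```

Inside `run_with_mock_data`, the unit sees the mock rows; once the function returns, the table is back in its original state.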

menu Quality > Unit tests > tab Mock data

- In the tab Mock data set, add a Mock data set.

- In the tab Tables, add one or more tables.

- In the tab Column selection, select columns for input values. You can also view the fallback values, mandatory status at the data model level, and data type for each column. Deselected columns will not be available as rows and column values.

Make sure to take the following rules into account:

- Always select primary key columns, except for identities.

- You cannot select calculated columns. If a selected column becomes calculated due to a data model change, unit tests using this mock data set will fail, and the column will be highlighted in red.

- Only columns with a default value (query) can have a fallback value (i.e. the default value) in the mock data. This ensures a limited set of columns can still be used. The fallback value is available in the overview of available columns.

Oracle: Mock data never uses fallback values. Instead, mocked tables do not have any mandatory non-key columns for the duration of the unit test. All non-key columns without a configured value, or set to Fallback value, will be null.

- In the tab Rows, add multiple mocked data rows as data for each table. You can also copy mocked data rows here. If no rows are specified, the table will contain 0 records. It is not necessary to take foreign key constraints into account.

- In the tab Column values, add the input values for the unit test. They do not need to exist in the data set; that depends on what you want to test. For example, if you want to test a business rule to prevent a user from adding a duplicate value, you can add an input value that is already in the data set.

The input field remains available regardless of the selected option, for easy data entry. Clearing a value sets the combo to Null-value, and providing a value sets it to either Constant value or Expression, depending on whether the input field or an IDE is used. You can select one of the following options to specify a column value:

- Constant value

- Expression

- Null-value - A unit test will fail if Null-value is used for a mandatory column or if a row uses it for a column that became mandatory due to a data model change. The entry will be highlighted in red as a warning.

- Fallback value - A unit test will fail if Fallback value is used for a non-identity key column or if a row uses it for a column that became a key column. The entry will be highlighted in red as a warning.

Changing column values does not refresh the entire document after every update. Perform a manual refresh to see your changes.
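The two failure rules for Null-value and Fallback value can be summarized as a small check. The following Python sketch is an illustration under assumptions: the `Column` class, the option names as lowercase strings, and `column_value_problem` are hypothetical and do not correspond to anything in the Software Factory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    # Hypothetical, simplified column description.
    name: str
    mandatory: bool = False
    is_key: bool = False
    is_identity: bool = False


def column_value_problem(column, option):
    """Return a description of the problem, or None if the choice is valid.

    option is one of "constant", "expression", "null", or "fallback".
    """
    if option == "null" and column.mandatory:
        return "Null-value used for a mandatory column"
    if option == "fallback" and column.is_key and not column.is_identity:
        return "Fallback value used for a non-identity key column"
    return None
```

A check like this also covers the data model changes mentioned above: when a column later becomes mandatory or becomes a key column, re-evaluating the same rows reports the entries that would make the unit test fail.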

Mock data sets


Mock data for a table or view

When a unit test is executed and mock data has been set up for a table, a mock table is created in the database. This mock table contains the mock data records as they have been set up in the mock data set. After the unit test has been executed, the mock table is dropped.

Setting up mock data for views within the context of the Software Factory works in the same way. However, these 'mock views' are created differently from the tables when the unit test is executed, because views do not actually contain any physical data.

Oracle: Mock data for views is not yet supported.

The unit test code for mock views is updated as follows:

- In the context of the unit test transaction:

  - The original view will be dropped.

  - The view will be re-created as a table with the same column definitions (identities, mandatory, default values) that it has in the Software Factory's data model.

  - The mock data rows, as set in the Software Factory, will be inserted into the just-created table.

- After the unit test has been run:

  - The original view will be restored, including its original triggers.

  - The table that was created in its place will no longer exist.

  - The data in the view will return to the data that is returned by the logic on which the view is based.

To see the effect of adding a view, execute the task Show unit test code.
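The drop, re-create, and restore sequence for a mock view can be imitated with any database that supports transactional DDL. The sketch below uses SQLite through Python's `sqlite3` module only because it ships with Python; it is not the code shown by Show unit test code, and the `customer` table, the `active_customer` view, and the function names are assumptions for this example.

```python
import sqlite3


def make_example_db():
    # isolation_level=None: transactions are controlled explicitly below.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, active INTEGER)"
    )
    conn.executemany(
        "INSERT INTO customer VALUES (?, ?, ?)", [(1, "Alice", 1), (2, "Bob", 0)]
    )
    conn.execute(
        "CREATE VIEW active_customer AS "
        "SELECT customer_id, name FROM customer WHERE active = 1"
    )
    return conn


def active_customer_names(conn):
    # Hypothetical unit under test: it reads from the view by name.
    rows = conn.execute("SELECT name FROM active_customer ORDER BY name").fetchall()
    return [row[0] for row in rows]


def run_with_mock_view(conn, mock_rows):
    conn.execute("BEGIN")
    try:
        # 1. Drop the original view.
        conn.execute("DROP VIEW active_customer")
        # 2. Re-create it as a table with the same columns.
        conn.execute(
            "CREATE TABLE active_customer (customer_id INTEGER PRIMARY KEY, name TEXT)"
        )
        # 3. Insert the mock rows into the just-created table.
        conn.executemany("INSERT INTO active_customer VALUES (?, ?)", mock_rows)
        return active_customer_names(conn)
    finally:
        # Rolling back removes the table and restores the original view.
        conn.execute("ROLLBACK")
```

During the call, the unit reads the mock rows from the temporary table; afterwards, `active_customer` is a view again and returns the data produced by its underlying query.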

Add a preparation query to a mock data set

With a preparation query, you can programmatically enhance mock data or other database objects such as views or functions via alter-statements. When running a unit test, the preparation query for a mock data set will be executed after all the mock tables have been prepared, and before the preparation for the unit test itself is executed.

menu Quality > Unit tests > tab Mock data

- In form Preparation, select Use preparation query. A text entry field appears.

- Edit the preparation query in the form directly, or edit it in your external editor.

- Save your preparation query.

Add a mock data set to a unit test

First, create a mock data set. You can then add it to one or more unit tests.

menu Quality > Unit tests > tab Mock data

- In tab List, select a unit test.

- Navigate to tab Form > tab Mock data.

- Select your mock data set directly or Import mock data from a specific unit test.

- Optional. Execute the task Go to mock data to inspect or maintain the selected mock data set. See also Go to a related screen for an object.

Mock data in a unit test

Run a unit test

The tab List shows an overview of all the unit tests for the selected branch.

Oracle: When you run a unit test in Oracle, triggers and constraints are disabled on mocked tables. This allows the real data to be temporarily removed and the mock data to be inserted. Due to technical limitations, the triggers cannot be re-enabled for the duration of the subsequent unit test. This may limit your options when attempting to unit test insert, update, or delete statements.

menu Quality > Unit tests > tab List or Form

- Select one or multiple unit tests.

- Run the tests:

  - Execute all unit tests - Runs all active unit tests.

  - Execute selected unit tests - Runs all selected active unit tests.

Unit test results

menu Quality > Unit tests > tab List/Form

The tab in the upper-right corner of the screen lists the results of all executed unit tests.

This information includes details such as the duration of the unit test, the date, the server, and the tester's identity. You can specify the retention period for this information through the setting Unit test retention (in days) found in the menu Maintenance > Configuration.

Fix a failed unit test

menu Quality > Unit tests > tab List or Form

Use the prefilters to show only unit tests that need attention:

- Warning and failed (ALT+W) - only show unit tests with a warning and failed tests.

- Failed (ALT+F) - only show failed unit tests.

A unit test can fail for several reasons.

- To solve a design problem:

  - Check the settings (such as Should abort or Should rollback)

  - Check the input parameters

  - Check the output parameters and expected messages

  - If configured, check the Preparation query and Assertion query

  - If configured, check the mock data set

  - Check the unit test code manually

- To solve a mock data set problem:

  - Have the right Tables been included?

  - Have the right Columns been included?

  - Do the Columns contain the right Column values?

  - If configured, check the Preparation query

- To solve a database problem:

  - Verify that the tested functionality is available on the database

  - Check that you selected the correct runtime configuration

  - If the runtime configuration is correct, check its contents, such as the database

If the unit test design, the mock data set, and the database are correct, the problem is in the functionality you are testing. This is, of course, the purpose of the unit test.

- To solve a problem in the functionality:

  - Check the control procedure. Select the task Go to control procedure in Functionality. See also Control procedure in a unit test.

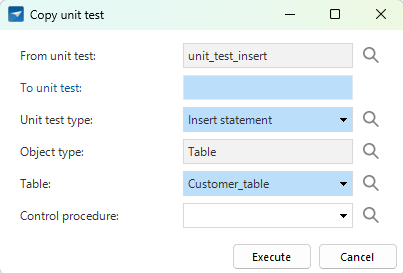

Copy a unit test

You can copy a unit test to create a similar unit test with different settings. For example, you can copy between unit test types (from an insert handler to an insert statement, or from a default to a layout) or between object types (a default from a table to a task).

The following data is included in the copy:

- Always: All data from the unit test form, including the assertion and preparation queries, expected message, and linked mock data.

- All input and output parameters that still apply when copying from one unit test type to another.

The following data is not included in the copy:

- Input parameters when copying from one object type to another.

- The mandatory parameters for tasks, insert handlers, and insert statements are not generated.

To copy a unit test:

menu Quality > Unit tests

- Select the unit test you want to copy.

- Execute the task Copy unit test.

- In the pop-up, configure the following options:

  - To unit test

  - Unit test type

  - Object type

  - Object (based on selected object type)

  - Control procedure

  For more information on these options, see Create a unit test.

- Select Execute.

Copy a unit test


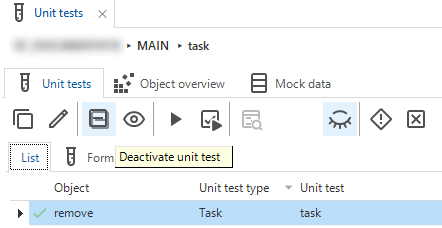

(De)activate a unit test

menu Quality > Unit tests > tab List

Sometimes, a unit test is temporarily not necessary and should not be executed.

- To deactivate a unit test, select the unit test and execute the Deactivate unit test task.

- Use the prefilter Hide inactive unit tests to hide or display the deactivated unit tests.

- To reactivate an inactive unit test, select the unit test and execute the Activate unit test task.

Deactivate a unit test


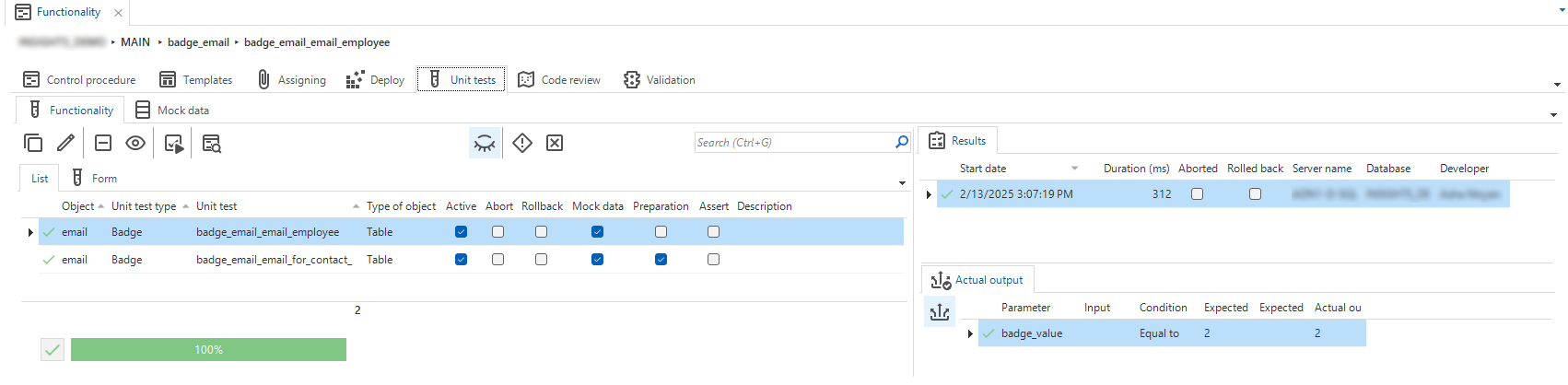

Unit tests in the Functionality modeler

Unit tests can also be created, maintained, or executed from the Functionality modeler.

menu Business logic > Functionality > tab Unit tests

The list shows all the unit tests directly connected with the program objects on the result page. For example, when a default procedure is created for Employee to combine the first and last name into a display name, the unit test written to validate the email address is also shown in the list.

The list also shows all unit tests that have the selected control procedure linked as their primary control procedure.

Unit tests in the Functionality modeler


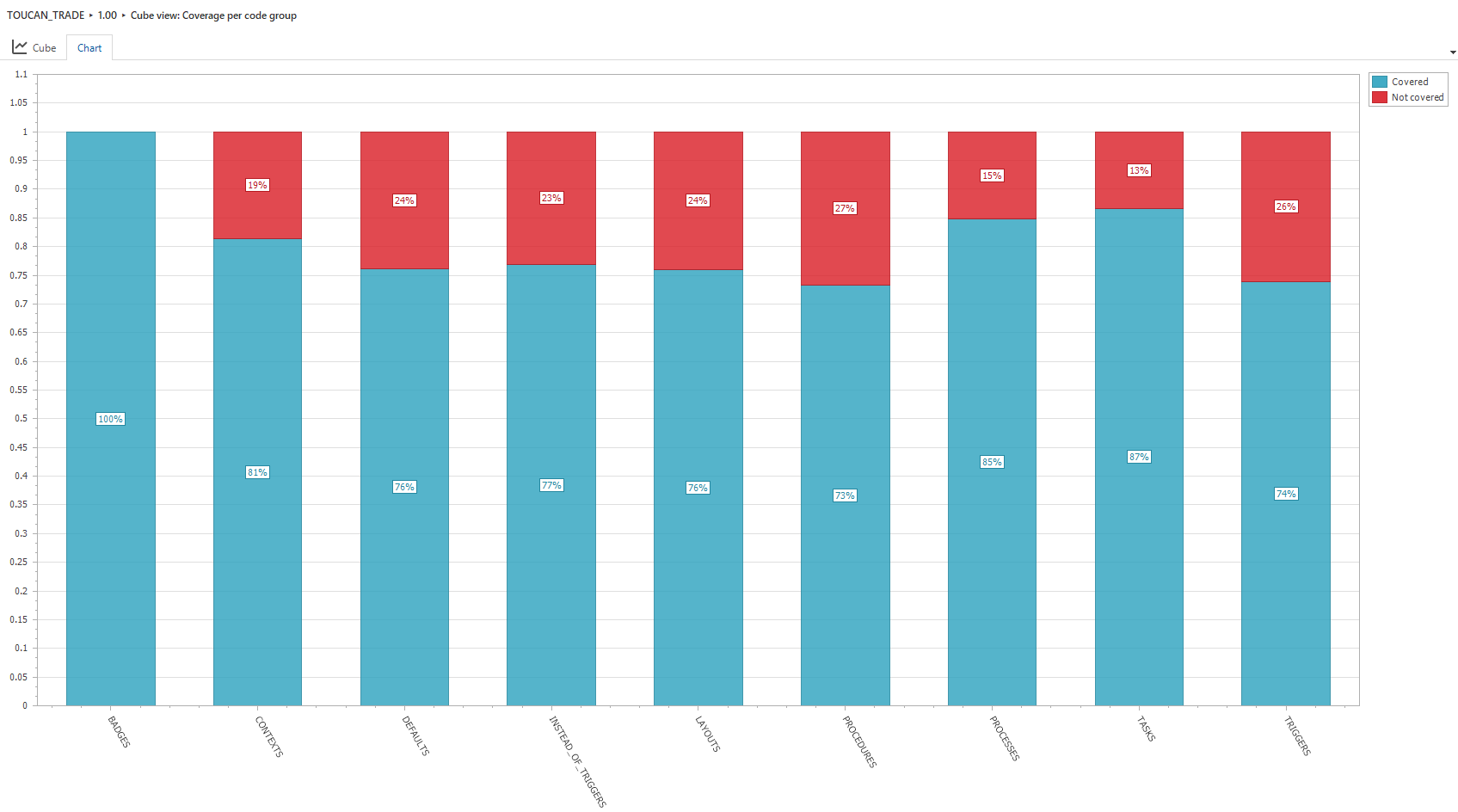

Unit test analysis

The Analysis menu group in the Software Factory provides three cubes to monitor the unit test runs and coverage.

The cubes for unit test coverage rely on the presence of program objects in the model, so ensure that the model definition is generated.

Unit test runs

menu Analysis > Unit test runs

This cube shows the number of runs for each unit test and their duration.

Unit test control procedure coverage

menu Analysis > Unit test control procedure coverage

This cube shows the ratio of control procedures covered by a unit test. Only control procedures of code groups that allow for unit tests are measured.

Three cube views are available:

- Coverage per code group

- Coverage per developer

- General coverage

Coverage per code group


The coverage status is determined as follows. A control procedure can be either:

- Covered - A program object item created by the control procedure is present in a program object involved directly in any active unit test.

- Covered (linked only) - The control procedure is linked to an active unit test, but the program object items of this control procedure are not in any program object directly involved in an active unit test.

- Not covered - The program object items created by this control procedure are part of program objects that are not directly involved in any unit test, nor is the control procedure linked to an active unit test.
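The three statuses follow a fixed order of checks, which the following Python sketch spells out. It is a reading of the definitions above, not the cube's actual query; the function name, its parameters, and the program object names in the usage example are hypothetical.

```python
def coverage_status(cp_program_objects, directly_tested_objects, linked_to_active_test):
    """Classify one control procedure.

    cp_program_objects: program objects that contain a program object item
        created by this control procedure.
    directly_tested_objects: program objects directly involved in any active
        unit test.
    linked_to_active_test: True if the control procedure is linked to an
        active unit test.
    """
    if set(cp_program_objects) & set(directly_tested_objects):
        return "Covered"
    if linked_to_active_test:
        return "Covered (linked only)"
    return "Not covered"
```

For instance, `coverage_status(["default_employee"], ["default_customer"], True)` yields `"Covered (linked only)"`, because the link exists but none of the control procedure's program objects is tested directly.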

Unit test program object coverage

menu Analysis > Unit test program object coverage

This cube is similar to the control procedure coverage cube but measures the number of program objects instead. Only program objects of code groups that can have unit tests are measured.

Two cube views are available:

- Coverage per code group

- General coverage

The coverage for program objects is generally lower than the coverage for control procedures, as a dynamically assigned control procedure might not have a unit test on every program object where it is assigned.
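A small worked example shows why the two ratios diverge. The Python sketch below assumes one dynamically assigned control procedure that contributes to three program objects, of which only one has an active unit test. All names are invented, and the calculation is a simplification that counts only directly covered items; it is not how the cubes are implemented.

```python
from fractions import Fraction

# Hypothetical model: control procedure -> program objects it is assigned to.
assignments = {
    "cp_format_name": ["default_employee", "default_customer", "default_supplier"],
}
# Only one of the three program objects has an active unit test.
directly_tested = {"default_employee"}


def control_procedure_coverage(assignments, directly_tested):
    # A control procedure counts as covered if any of its program objects is tested.
    covered = sum(
        1 for objects in assignments.values() if directly_tested.intersection(objects)
    )
    return Fraction(covered, len(assignments))


def program_object_coverage(assignments, directly_tested):
    # Every program object must be tested itself to count as covered.
    all_objects = {obj for objects in assignments.values() for obj in objects}
    return Fraction(len(all_objects & directly_tested), len(all_objects))
```

Under these assumptions, the control procedure coverage is 1 out of 1, while the program object coverage is 1 out of 3.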